Tips on How to Avoid Multicollinearity

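Depending on the goal of your regression analysis, you might not actually need to resolve the multicollinearity. For example, if you only care about predictive accuracy rather than about interpreting individual coefficients, multicollinearity is often harmless.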

How to avoid multicollinearity: you can also try to combine or … To do so, click the Analyze tab, then Regression, then Linear. Keep in mind that X and X² tend to be highly correlated.
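The claim that X and X² are highly correlated is easy to check numerically. The sketch below uses a hypothetical predictor taking the values 1 to 10 and also shows mean-centering before squaring, which is one common remedy for polynomial terms. Centering is our illustrative choice here, not necessarily the remedy the original text had in mind.

```python
import numpy as np

# Hypothetical predictor: the values 1, 2, ..., 10 (all positive).
x = np.arange(1, 11, dtype=float)
r_raw = np.corrcoef(x, x ** 2)[0, 1]

# Mean-centre X before squaring it (one common remedy for polynomial terms).
xc = x - x.mean()
r_centered = np.corrcoef(xc, xc ** 2)[0, 1]

print(f"corr(X, X^2)   = {r_raw:.3f}")
print(f"corr(Xc, Xc^2) = {r_centered:.3f}")
```

Because the centred values are symmetric around zero, the second correlation is zero up to floating-point rounding, while the raw correlation is close to 1.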

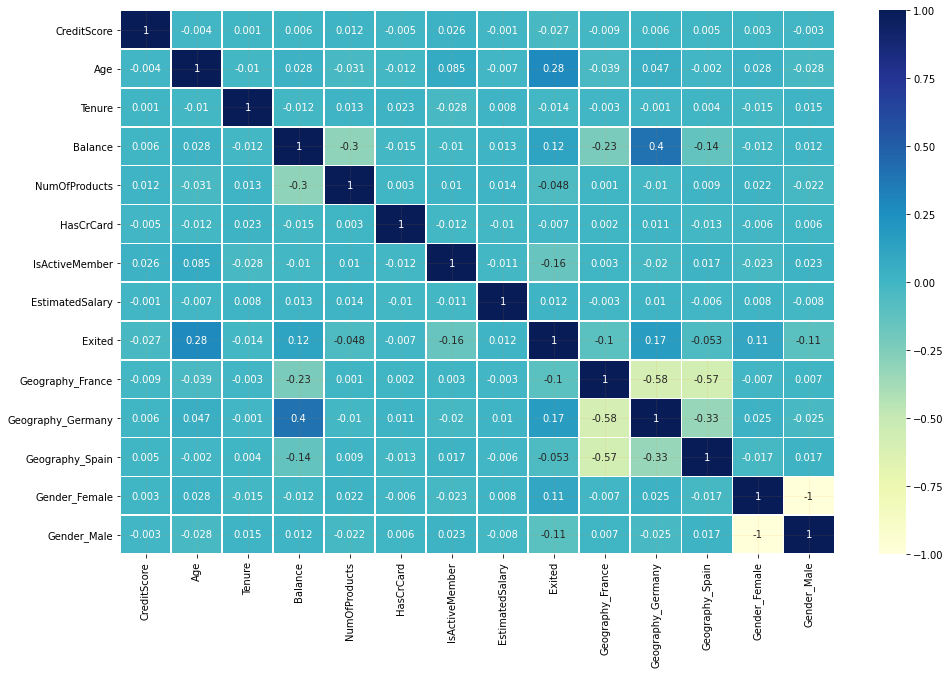

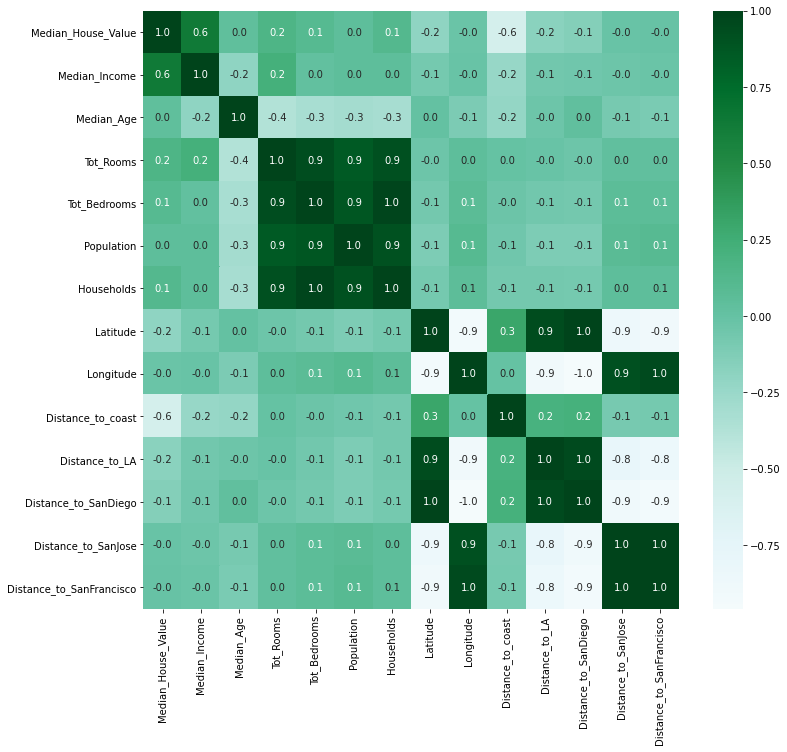

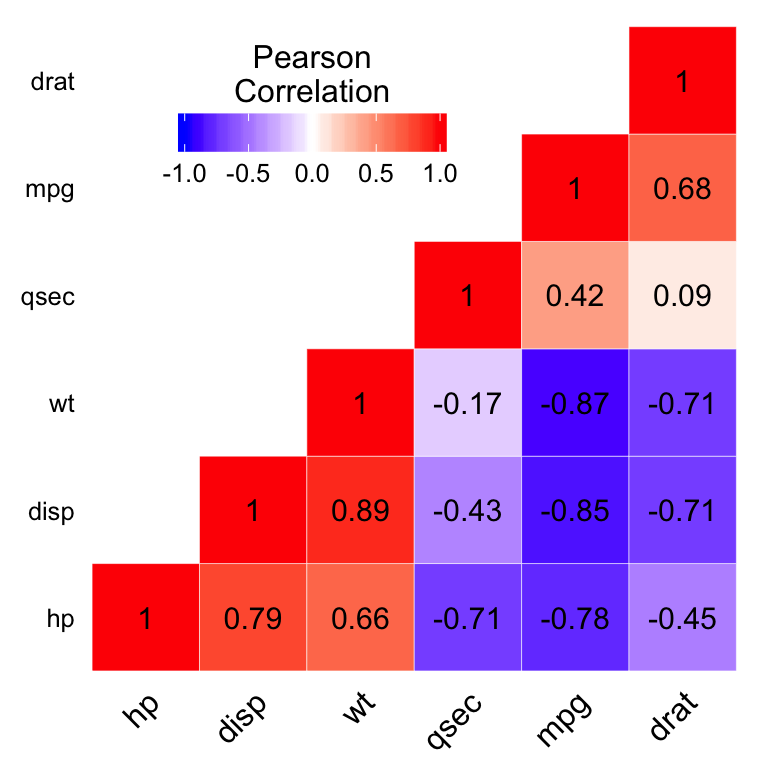

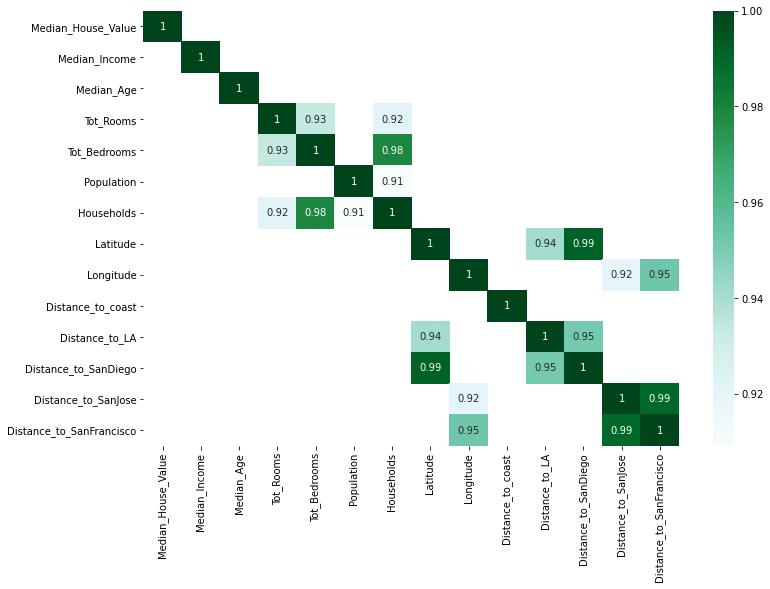

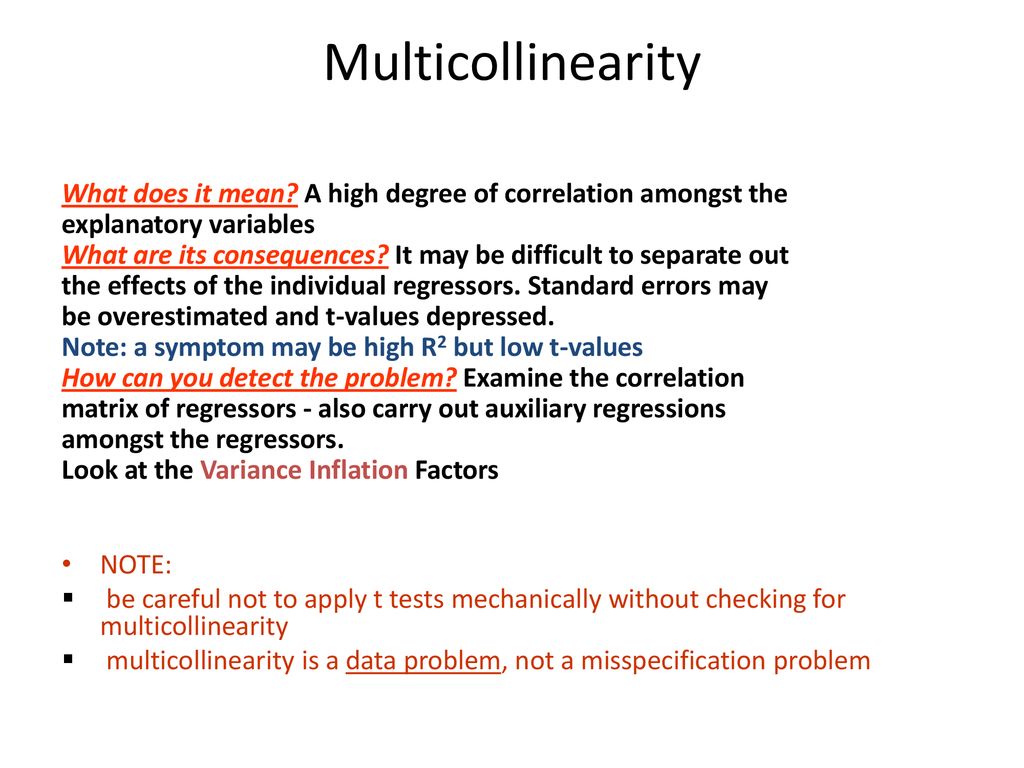

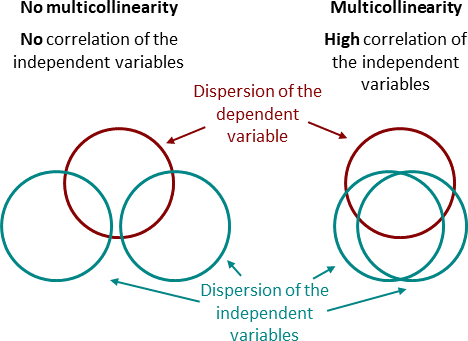

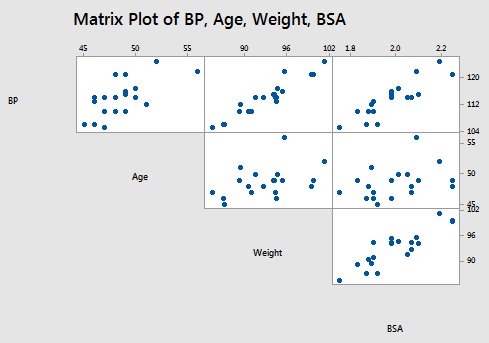

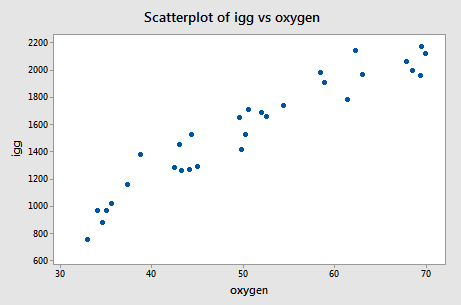

Multicollinearity refers to a situation in which two or more explanatory variables in a multiple regression model are highly linearly related. In general, there are two different methods to remove multicollinearity: 1. … Fortunately, it's possible to detect multicollinearity using a metric known as the variance inflation factor (VIF), which measures how much the variance of a regression coefficient is inflated because its predictor is correlated with the other predictors.
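For reference, here is the standard textbook definition of the VIF for predictor $j$, added for illustration. $R_j^2$ is the coefficient of determination obtained by regressing $x_j$ on all the other predictors, and $\sigma^2$ is the error variance in the ordinary least squares model:

```latex
\mathrm{VIF}_j = \frac{1}{1 - R_j^2},
\qquad
\operatorname{Var}(\hat\beta_j) = \frac{\sigma^2}{\sum_{i=1}^{n} (x_{ij} - \bar{x}_j)^2} \cdot \mathrm{VIF}_j .
```

$\mathrm{VIF}_j = 1$ when $x_j$ is uncorrelated with the other predictors, and it grows without bound as $R_j^2 \to 1$.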

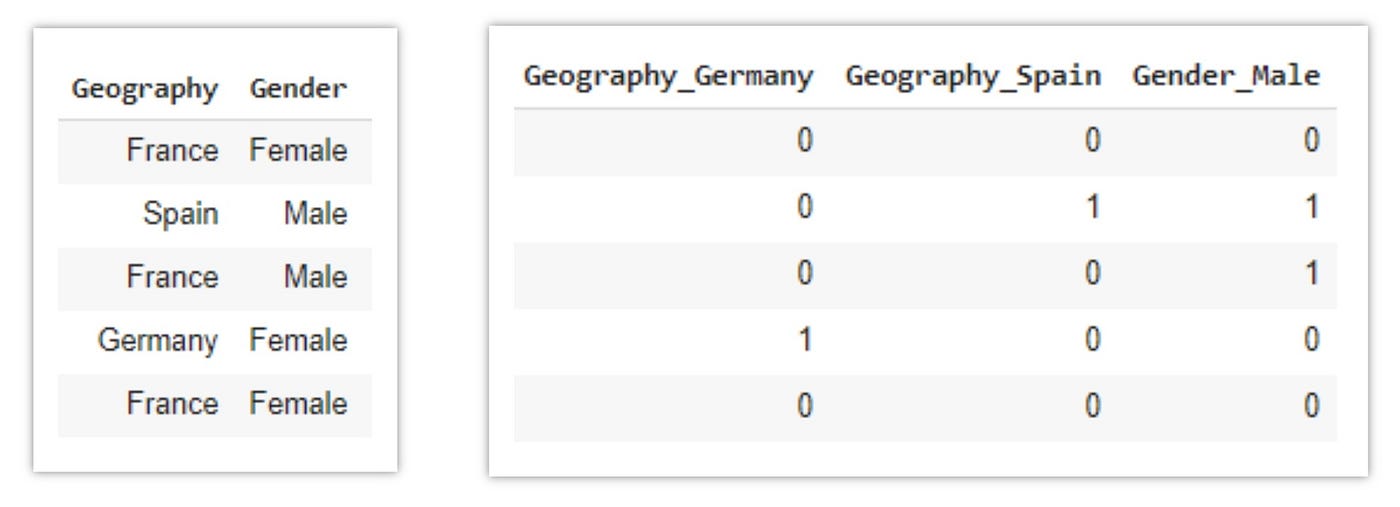

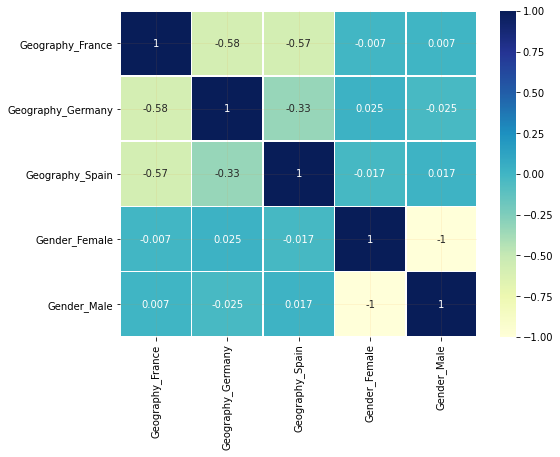

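In this tutorial, we will walk through a simple example of how you can deal with multicollinearity. Note that pd.get_dummies silently introduces multicollinearity into your data: by default it creates one dummy column per category, and those columns always sum to one, so they are perfectly collinear with the intercept (the "dummy variable trap"). There are multiple ways to overcome the problem of multicollinearity.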
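A minimal sketch of the dummy variable trap, assuming a small hypothetical `color` column. It compares the rank of the design matrix (intercept plus dummies) with and without `drop_first=True`, which is a real `pd.get_dummies` parameter that drops the first category level. The helper name `design_matrix` is hypothetical.

```python
import numpy as np
import pandas as pd

# Hypothetical categorical data with three levels.
df = pd.DataFrame({"color": ["red", "green", "blue", "green", "red", "blue"]})


def design_matrix(drop_first: bool) -> np.ndarray:
    """Intercept column followed by the dummy columns for `color`."""
    dummies = pd.get_dummies(df["color"], drop_first=drop_first, dtype=float)
    intercept = np.ones((len(df), 1))
    return np.hstack([intercept, dummies.to_numpy()])


full = design_matrix(drop_first=False)    # intercept + one dummy per level
reduced = design_matrix(drop_first=True)  # intercept + (levels - 1) dummies

# A rank lower than the column count means perfect multicollinearity.
print("all dummies:   columns", full.shape[1], "rank", np.linalg.matrix_rank(full))
print("drop_first:    columns", reduced.shape[1], "rank", np.linalg.matrix_rank(reduced))
```

With every dummy kept, the matrix has one more column than its rank. Dropping one level restores full column rank.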

A greater VIF denotes a stronger correlation between that predictor and the other predictors. (This was taken directly from Wikipedia.) To address the problem, you may use ridge regression, principal component regression, or partial least squares regression.
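As a minimal sketch of the first option, ridge regression adds a penalty $\lambda \lVert\beta\rVert^2$ to the least-squares objective, giving $\hat\beta = (X^\top X + \lambda I)^{-1} X^\top y$ on centred data. This keeps the solution stable when $X^\top X$ is nearly singular. The closed-form NumPy version below is our own illustration, not the tutorial's code; the function name `ridge_fit`, the simulated data, and $\lambda = 1.0$ are hypothetical choices. The intercept is left unpenalized by centering X and y first.

```python
import numpy as np


def ridge_fit(X: np.ndarray, y: np.ndarray, lam: float):
    """Closed-form ridge regression; lam=0 reduces to ordinary least squares.

    X and y are centred so that the intercept is not penalized.
    """
    x_mean = X.mean(axis=0)
    y_mean = y.mean()
    Xc = X - x_mean
    yc = y - y_mean
    p = X.shape[1]
    beta = np.linalg.solve(Xc.T @ Xc + lam * np.eye(p), Xc.T @ yc)
    intercept = y_mean - x_mean @ beta
    return intercept, beta


# Hypothetical data: x2 is almost an exact copy of x1.
rng = np.random.default_rng(0)
n = 100
x1 = rng.normal(size=n)
x2 = x1 + rng.normal(scale=0.01, size=n)
X = np.column_stack([x1, x2])
y = 3.0 + x1 + x2 + rng.normal(scale=0.5, size=n)

_, beta_ols = ridge_fit(X, y, lam=0.0)    # unstable under near-collinearity
_, beta_ridge = ridge_fit(X, y, lam=1.0)  # shrunk towards a stable split
print("OLS:  ", beta_ols)
print("ridge:", beta_ridge)
```

Both fits recover roughly the same sum of coefficients, but the OLS split between x1 and x2 is driven by noise, whereas ridge shrinks the poorly determined direction and keeps the two coefficients close to each other.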

A special solution in polynomial models is … To reduce the amount of multicollinearity in a model, one can remove the specific variables that are identified as the most collinear. To determine whether multicollinearity is a problem, we can compute VIF values for each of the predictor variables.
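The two steps above, computing a VIF per predictor and removing the most collinear one, can be sketched in plain NumPy. This is an illustrative implementation under our own assumptions. The helper names `vif` and `drop_high_vif`, the threshold of 10 (a common rule of thumb), and the simulated columns `a`, `b`, `c` are all hypothetical. statsmodels also provides `variance_inflation_factor` in `statsmodels.stats.outliers_influence` if you prefer a library routine.

```python
import numpy as np


def vif(X: np.ndarray) -> np.ndarray:
    """VIF of every column of X (n_samples x n_features), via VIF_j = 1 / (1 - R_j^2)."""
    n, p = X.shape
    out = np.empty(p)
    for j in range(p):
        target = X[:, j]
        # Regress column j on all other columns plus an intercept.
        A = np.column_stack([np.ones(n), np.delete(X, j, axis=1)])
        coef, *_ = np.linalg.lstsq(A, target, rcond=None)
        resid = target - A @ coef
        r2 = 1.0 - (resid @ resid) / np.sum((target - target.mean()) ** 2)
        out[j] = np.inf if r2 >= 1.0 else 1.0 / (1.0 - r2)
    return out


def drop_high_vif(X: np.ndarray, names, threshold: float = 10.0):
    """Repeatedly drop the column with the largest VIF until all VIFs are <= threshold."""
    names = list(names)
    while X.shape[1] > 1:
        v = vif(X)
        worst = int(np.argmax(v))
        if v[worst] <= threshold:
            break
        X = np.delete(X, worst, axis=1)
        names.pop(worst)
    return X, names


# Hypothetical data: c is almost exactly a + b.
rng = np.random.default_rng(1)
a = rng.normal(size=200)
b = rng.normal(size=200)
c = a + b + rng.normal(scale=0.05, size=200)
X = np.column_stack([a, b, c])

print("VIFs:", vif(X))
kept, names = drop_high_vif(X, ["a", "b", "c"])
print("kept:", names, "VIFs:", vif(kept))
```

Dropping one variable at a time and recomputing matters: once the most collinear column is removed, the VIFs of the remaining columns usually fall sharply.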

This is in agreement with the fact that a higher …